Claude 3 Haiku: our fastest model yet

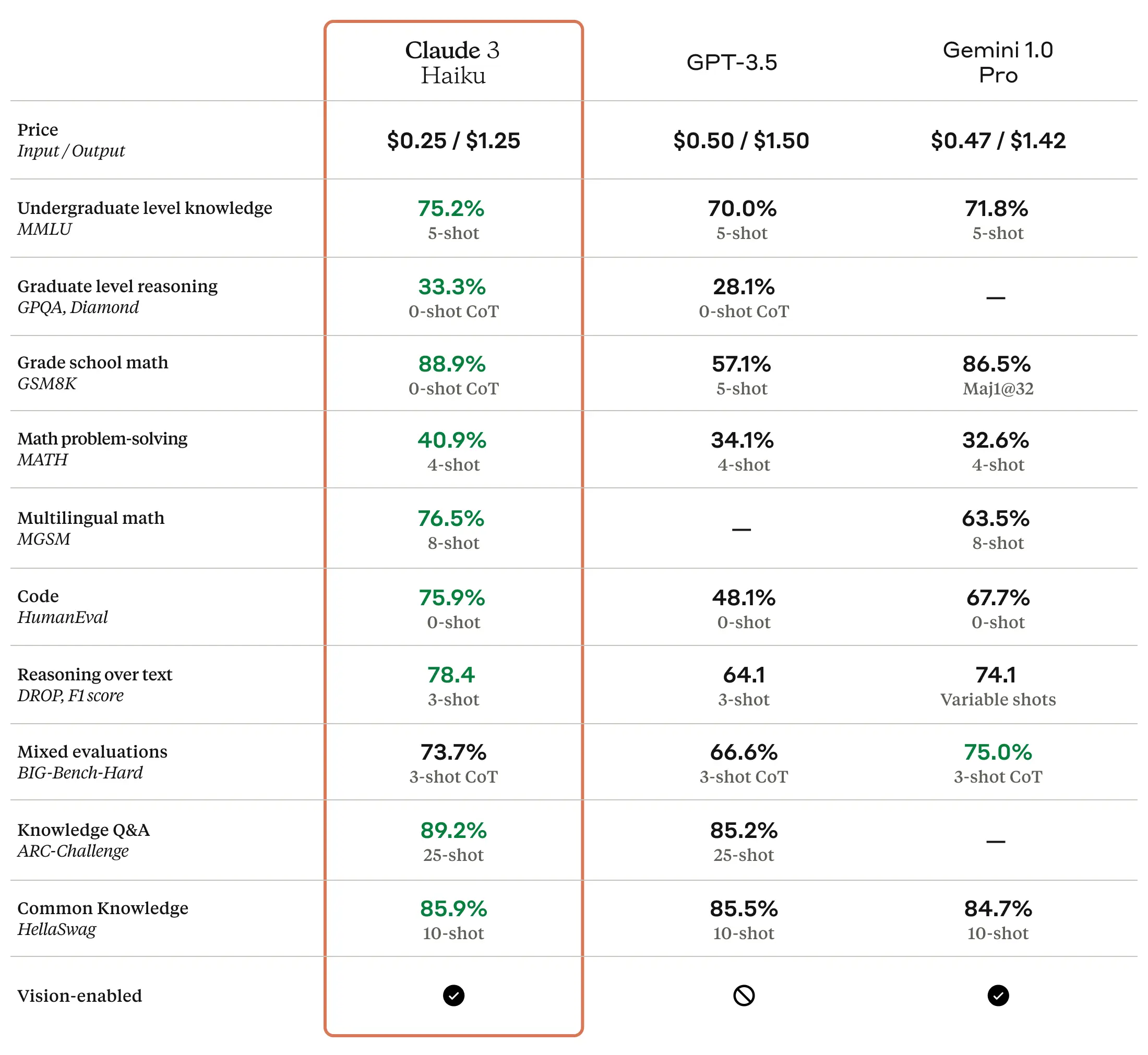

Today we’re releasing Claude 3 Haiku, the fastest and most affordable model in its intelligence class. With state-of-the-art vision capabilities and strong performance on industry benchmarks, Haiku is a versatile solution for a wide range of enterprise applications. The model is now available alongside Sonnet and Opus in the Claude API and on claude.ai for our Claude Pro subscribers.

Speed is essential for our enterprise users who need to quickly analyze large datasets and generate timely output for tasks like customer support. Claude 3 Haiku is three times faster than its peers for the vast majority of workloads, processing 21K tokens (~30 pages) per second for prompts under 32K tokens [1]. It also generates swift output, enabling responsive, engaging chat experiences and the execution of many small tasks in tandem.
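The throughput figure converts directly into a rough wait time for a single prompt. The sketch below does that conversion. It assumes ingestion time scales linearly with prompt length, and it treats "32K" as 32,000 tokens. The post quotes the rate only for prompts under that size. The helper name `estimated_ingestion_seconds` is hypothetical.

```python
# Rough prompt-ingestion estimate from the quoted throughput figure.
# Assumptions: ingestion time scales linearly with prompt length, and the
# 21K tokens/s rate applies only below 32,000 tokens (see footnote [1]).

THROUGHPUT_TOKENS_PER_S = 21_000  # quoted rate for prompts under 32K tokens
QUOTED_LIMIT_TOKENS = 32_000      # assumed reading of "32K"


def estimated_ingestion_seconds(prompt_tokens: int) -> float:
    """Seconds to ingest a prompt at the quoted rate (hypothetical helper)."""
    if prompt_tokens >= QUOTED_LIMIT_TOKENS:
        # Footnote [1]: longer prompts ingest more slowly, so the rate does not apply.
        raise ValueError("the 21K tokens/s figure is quoted only for prompts under 32K tokens")
    return prompt_tokens / THROUGHPUT_TOKENS_PER_S


# A ~10K-token Supreme Court case (footnote [2]) takes roughly half a second.
print(f"{estimated_ingestion_seconds(10_000):.2f} s")
```

Image-heavy prompts may take longer, because footnote [1] notes additional latency for images.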

Haiku's pricing, with output tokens priced at five times the rate of input tokens (a 1:5 ratio), was designed for enterprise workloads, which often involve longer prompts. Businesses can rely on Haiku to quickly analyze large volumes of documents, such as quarterly filings, contracts, or legal cases, for half the cost of other models in its performance tier. For instance, Claude 3 Haiku can process and analyze 400 Supreme Court cases [2] or 2,500 images [3] for just one US dollar.
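The one-dollar figures follow from the token estimates in footnotes [2] and [3]. The sketch below reproduces that arithmetic. The per-million-token prices it prints are derived from this post's own numbers, not taken from an official price list. The derivation makes two assumptions. First, the $1 figure covers input tokens only. Second, the 1:5 ratio compares the input-token price with the output-token price.

```python
# Back-of-the-envelope check of the "for one US dollar" figures.
# Token estimates come from the footnotes. The prices below are *implied*
# by those estimates (assumption: $1 buys input tokens only), not quoted.

CASE_TOKENS = 10_000   # footnote [2]: ~10K tokens per Supreme Court case
IMAGE_TOKENS = 1_600   # footnote [3]: ~1.6K tokens per image


def total_tokens(items: int, tokens_per_item: int) -> int:
    """Total input tokens for a batch of similarly sized items."""
    return items * tokens_per_item


cases = total_tokens(400, CASE_TOKENS)       # 4,000,000 tokens
images = total_tokens(2_500, IMAGE_TOKENS)   # 4,000,000 tokens

# If $1 buys ~4M input tokens, the implied input price is $0.25 per million.
implied_input_price_per_mtok = 1.0 / (cases / 1_000_000)

# Assumption: the 1:5 ratio means output tokens cost five times as much as input.
implied_output_price_per_mtok = 5 * implied_input_price_per_mtok

print(f"{cases:,} tokens for 400 cases, {images:,} tokens for 2,500 images")
print(f"implied input price:  ${implied_input_price_per_mtok:.2f} / M tokens")
print(f"implied output price: ${implied_output_price_per_mtok:.2f} / M tokens")
```

Both examples come to the same 4M tokens. That is why a single dollar figure covers either workload.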

Alongside its speed and affordability, Claude 3 Haiku prioritizes enterprise-grade security and robustness. We conduct rigorous testing to reduce the likelihood of harmful outputs and jailbreaks of our models so they are as safe as possible. Additional layers of defense include continuous systems monitoring, endpoint hardening, secure coding practices, strong data encryption protocols, and stringent access controls to protect sensitive data. We also conduct regular security audits and work with experienced penetration testers to proactively identify and address vulnerabilities. More information about these measures can be found in the Claude 3 model card.

Starting today, customers can use Claude 3 Haiku through our API or with a Claude Pro subscription on claude.ai. Claude 3 Haiku is available on Amazon Bedrock and will be coming soon to Google Cloud Vertex AI.

Footnotes

[1] Prompts containing over 32K tokens may experience 30-60% slower ingestion speeds, which we expect to improve in the coming weeks. Customers may also experience additional latency when processing images.

[2] Each Supreme Court case is estimated at 10K tokens.

[3] Each image is estimated at 1.6K tokens.
