Anthropic Education Report: How educators use Claude

Understandably, much of the conversation about AI in education focuses on how students are using large language models to help them study and write. But educators use AI too. In a recent Gallup survey, teachers reported that AI tools saved them an average of 5.9 hours per week. And in an inversion of the usual discussion, students have begun expressing concerns about professors using AI in the classroom.

We previously reported data on how students were using AI. Our new analysis looks at professors: we analyzed ~74,000 anonymized conversations from higher education professionals around the world on Claude.ai this past May and June.¹ We also partnered with Northeastern University to hear directly from faculty about how they were using AI within the university. Our findings provide an empirical snapshot of educator AI adoption, specifically in university settings.

We find that:

| Educators use AI in and out of the classroom: their uses range from developing course materials and writing grant proposals to academic advising and managing administrative tasks like admissions and financial planning. |

| Educators aren't just using chatbots; they're building their own custom tools with AI: faculty are using Claude Artifacts to create interactive educational materials, such as chemistry simulations, automated grading rubrics, and data visualization dashboards. |

| Educators tend to automate the drudgery while staying in the loop for everything else: for tasks requiring significant context, creativity, or direct student interaction (like designing lessons, advising students, and writing grant proposals), educators are more likely to use AI as an enhancement. In contrast, routine administrative work such as financial management and record-keeping is more automation-heavy. |

| Some educators are automating grading; others are deeply opposed: in our Claude.ai data, faculty used AI for grading and evaluation less frequently than for other tasks, but when they did, 48.9% of those conversations were automation-heavy (where the AI directly performs the task). That's despite educators' concerns about automating assessment, and despite our surveyed faculty rating it as the area where AI was least effective. |

Identifying educators’ use of Claude

In this research, we used our automated analysis tool, which reveals broad patterns of Claude usage while protecting users’ privacy.

Studying higher education professionals’ use of Claude.ai presents unique challenges, as we don’t currently collect self-reported occupational data on our platform. Unlike students, who often explicitly mention coursework or assignments, educators’ AI interactions span teaching, research, administration, and personal learning, making them harder to identify and categorize.

Using our privacy-preserving tool, we analyzed conversations from Claude.ai Free and Pro accounts associated with higher education email addresses and then automatically filtered them for educator-specific tasks, such as creating syllabi, grading assignments, or developing course materials.² This filtering yielded approximately 74,000 conversations from a period in May and June. Our analysis should be viewed as an exploration of how educators use AI for profession-specific tasks, not a comprehensive view of all educator AI usage.

We also matched each conversation to the most appropriate task from the comprehensive list of educator tasks in the O*NET database of occupational information from the U.S. Department of Labor. We defined educator tasks as those associated with “Postsecondary” teaching or administrative occupations.

To shed light on educators' motivations, concerns, and usage patterns, we complemented our analysis with survey data and qualitative research from 22 Northeastern University faculty members who are early adopters of AI.

Common uses among educators

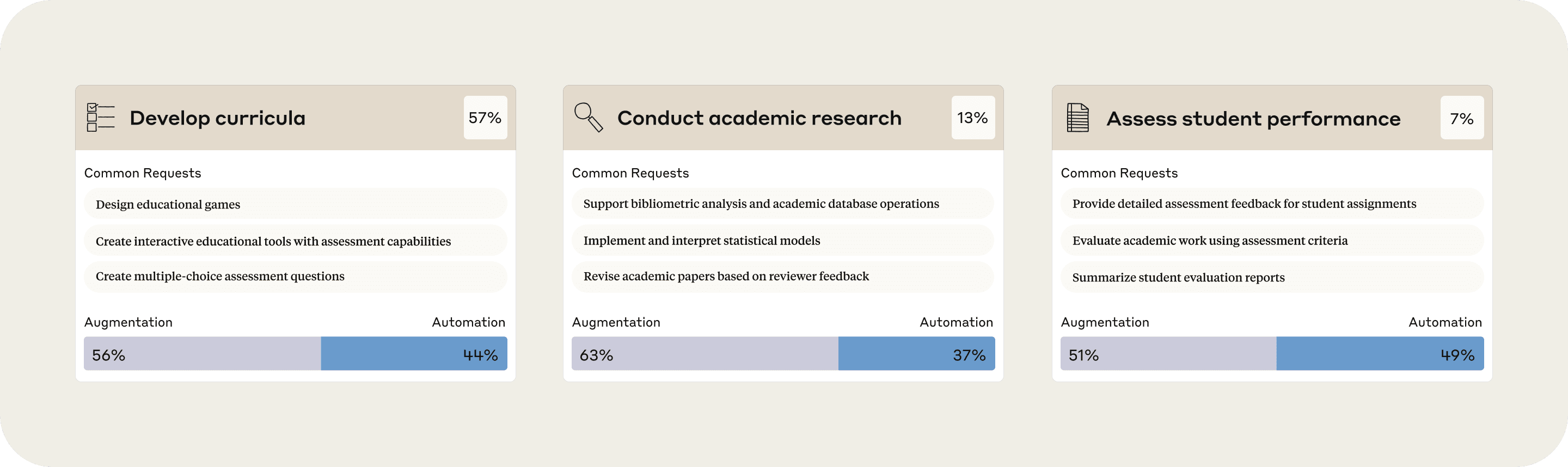

The most prominent use of AI, as revealed by both our Claude.ai analysis and our qualitative research with Northeastern, was for curriculum development. Our Claude.ai analysis also surfaced academic research and assessing student performance as the second and third most common uses.

In our surveys, Northeastern faculty reported that another common use was AI for their own learning (29% of their AI time on average). However, we did not study this in our Claude.ai analysis, because of our filtering mechanism and the difficulty of distinguishing between student and educator usage in these learning instances.

Some other particularly interesting uses we discovered in the Claude.ai data include:

- Creating mock legal scenarios for educational simulations;

- Developing vocational education and workforce training content;

- Drafting recommendation letters for academic or professional applications;

- Creating meeting agendas and related administrative documents.

Why faculty use AI in these cases

Our qualitative research with Northeastern faculty hints at why educators often gravitate towards these common AI uses:

- Automation of a tedious task (“It takes care of the tedious tasks”; helps with “rote portions of fundraising”);

- Collaborative thought partner (“AI can find effective ways to explain concepts to students that I had not thought of myself”);

- Personalized learning experiences for students (“AI is useful for giving students and me individualized, interactive learning experiences beyond what one instructor could provide”).

How educators are building custom tools with AI

One of the most inspiring findings is how educators use Claude's Artifacts feature to create interactive educational materials. Rather than just having conversations, educators are often building complete, functional resources that in some cases they can immediately deploy in their classrooms.

As one surveyed Northeastern faculty member put it: “What was prohibitively expensive (time) to do [before] now becomes possible. Custom simulation, illustration, interactive experiment. Wow. Much more engaging for students.”

Key creations built by educators

| Interactive educational games: web-based games including escape rooms, platform games, and simulations that teach concepts through gamification across various subjects and levels |

| Assessment and evaluation tools: HTML-based quizzes with automatic feedback systems, CSV data processors for analyzing student performance, and comprehensive grading rubrics |

| Data visualization: interactive displays to help students visualize everything from historical timelines to scientific concepts |

| Subject-specific learning tools: specialized resources like chemistry stoichiometry games, genetics quizzes with automatic feedback, and computational physics models |

| Academic calendars and scheduling tools: interactive calendars that can be automatically populated, downloaded as images, or exported as PDFs for displaying class periods, exam times, professional development sessions, and institutional events |

| Budget planning and analysis tools: budget documents for educational institutions with specific expense categories, cost allocations, and budgetary management tools |

| Academic documents: meeting minutes, emails for grade-related communications and academic integrity issues, recommendation letters for faculty awards, tenure appeals, grant applications, interview invitations, and committee appointments |

This goes beyond just Claude. One professor described how new AI tools in general enable them to “translate [their] own content into more accessible / engaging forms (interactive pages, simulation, podcast, video).”

These creations represent a shift from AI as conversational assistant to AI as creative collaborator, enabling educators to produce personalized educational materials that might traditionally require significant technical expertise or resources.

The augmentation-automation spectrum

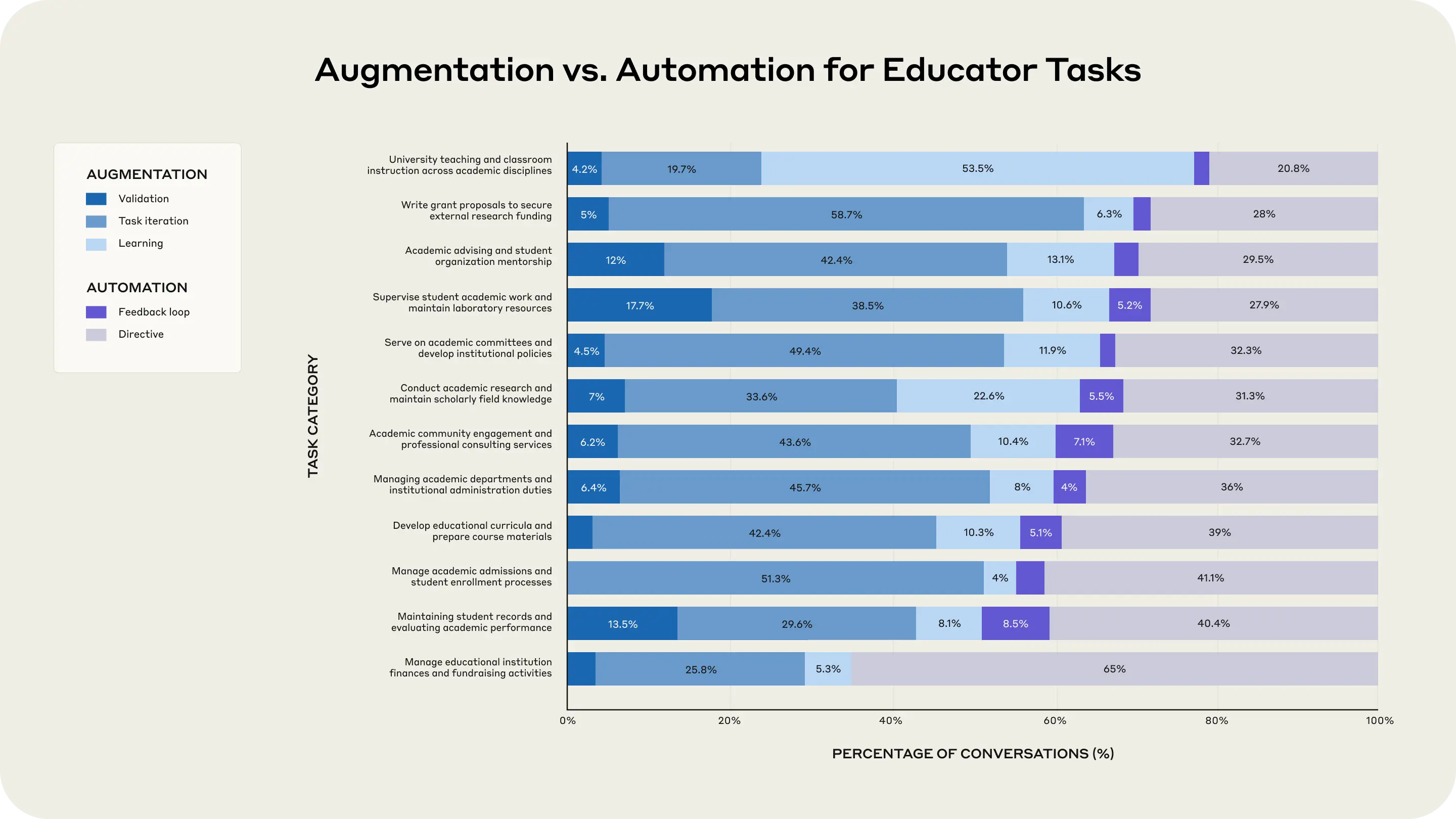

Our analysis reveals a nuanced picture of how educators balance AI augmentation (collaborative use) and automation (delegating tasks entirely), building on Anthropic’s prior work on the Anthropic Economic Index.

Key patterns emerge across different educational tasks in the Claude.ai data:

Tasks with higher augmentation tendencies:

- University teaching and classroom instruction, which includes creating educational materials and practice problems (77.4% augmentation);

- Writing grant proposals to secure external research funding (70.0% augmentation);

- Academic advising and student organization mentorship (67.5% augmentation);

- Supervising student academic work (66.9% augmentation).

Tasks with relatively higher automation tendencies:

- Managing educational institution finances and fundraising (65.0% automation);

- Maintaining student records and evaluating academic performance (48.9% automation);

- Managing academic admissions and enrollment (44.7% automation).
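To make figures like “65.0% automation” concrete, here is a minimal sketch of how a per-task automation share could be computed, assuming each conversation has already been labeled with a task and a collaboration mode. The record layout, the `automation_shares` function, and the toy counts are all hypothetical and invented for illustration, not taken from the study. Following the chart footnote, it assumes conversations whose mode could not be identified are dropped before computing shares.

```python
from collections import Counter, defaultdict
from typing import Iterable, Optional

# Hypothetical record: (task label, collaboration mode). The mode is
# "automation", "augmentation", or None when it could not be identified.
Conversation = tuple[str, Optional[str]]

MODES = ("automation", "augmentation")


def automation_shares(conversations: Iterable[Conversation]) -> dict[str, float]:
    """Percentage of automation-heavy conversations per task.

    Conversations with an unidentified mode (None) are skipped, so each
    share is taken over identified conversations only.
    """
    counts: dict[str, Counter] = defaultdict(Counter)
    for task, mode in conversations:
        if mode is None:
            continue  # excluded, mirroring the chart footnote
        if mode not in MODES:
            raise ValueError(f"unknown mode: {mode!r}")
        counts[task][mode] += 1
    return {
        task: 100 * c["automation"] / (c["automation"] + c["augmentation"])
        for task, c in counts.items()
    }


# Toy data, invented for illustration only (not from the study).
data = (
    [("finance", "automation")] * 13
    + [("finance", "augmentation")] * 7
    + [("finance", None)] * 3
    + [("advising", "automation")] * 1
    + [("advising", "augmentation")] * 3
)
shares = automation_shares(data)
print(shares)  # → {'finance': 65.0, 'advising': 25.0}
```

Dropping unidentified conversations from the denominator means they neither raise nor lower a task's share; with only two modes left, the augmentation share in this sketch is simply 100 minus the automation share (35.0% for the toy finance task).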

This variation suggests that whether educators delegate a task entirely to AI depends on the task. Consistent with our survey results, routine administrative and financial management tasks are more likely to be fully delegated than tasks close to direct student interaction (such as creating practice materials or advising on doctoral-level academic research). These student-facing interactions often require significant context and thus collaboration between AI and professor. For example, as one Northeastern professor put it, when designing lesson plans, “AI needs guidance on the level of material and context with regard to what we have already covered.”

Educators also seem more likely to use AI in an augmentative manner for work requiring creativity or complex decision-making, such as writing grant proposals. Describing how they brainstorm with AI, one surveyed professor wrote:

“It's the conversation with the LLM that's valuable, not the first response. This is also what I try to teach students. Use it as a thought partner, not a thought substitute.”

That said, the finding that 48.9% of grading-related conversations were automation-heavy is concerning. Although surveyed professors rated grading as the task at which AI was least effective, it still appeared in the Claude.ai data. And although grading accounted for only 7% of the Claude.ai conversations we studied, it was the second most automation-heavy task. This includes sub-tasks like providing feedback on student assignments and grading their work using rubrics. While it’s not clear to what degree these AI-generated responses factor into final grades and feedback, the interactions surfaced by our research do show some amount of delegation to Claude.

Using AI in grading remains a contentious issue among educators. One Northeastern professor shared: “Ethically and practically, I am very wary of using [AI tools] to assess or advise students in any way. Part of that is the accuracy issue. I have tried some experiments where I had an LLM grade papers, and they're simply not good enough for me. And ethically, students are not paying tuition for the LLM’s time, they're paying for my time. It's my moral obligation to do a good job (with the assistance, perhaps, of LLMs).”

While AI feedback can support a student’s development in some ways, such as through automated systems that provide formative feedback (e.g., those educators are building in Claude Artifacts), most educators seem to agree that grading shouldn’t be anywhere close to fully automated.

How educators are rethinking what to teach

Many educators recognize that AI tools are changing the way students learn. That in turn puts pressure on educators to change the way they’re teaching. As one surveyed professor put it:

“AI is forcing me to totally change how I teach. I am expending a lot of effort trying to figure out how to deal with the cognitive offloading issue.”

It’s also changing what professors are teaching. In coding, for example, according to one professor, “AI-based coding has completely revolutionized the analytics teaching/learning experience. Instead of debugging commas and semicolons, we can spend our time talking about the concepts around the application of analytics in business.”

More broadly, the ability to evaluate AI-generated content for accuracy is becoming increasingly important. “The challenge is [that] with the amount of AI generation increasing, it becomes increasingly overwhelming for humans to validate and stay on top,” one professor wrote. Professors are keen to help their students build enough expertise in a subject area to have this discernment.

Assessments are also starting to look different. While student cheating and cognitive offloading remain concerns, some educators are rethinking their assessments altogether. As one educator put it:

“If Claude or a similar AI tool can complete an assignment, I don’t worry about students cheating; I [am] concerned that we are not doing our job as educator[s].”

One Northeastern professor shared that they “will never again assign a traditional research paper” after struggling with too many students submitting AI-written assignments. Instead, they wrote: “I will redesign the assignment so it can't be done with AI next time. I had one student complain that the weekly homework was hard to do and they were annoyed because Claude and ChatGPT was useless in completing the work. I told them that was a compliment, and I will endeavor to hear that more from students.”

One path forward may be to raise the bar on assignments in light of these new tools, expecting students to tackle more complex, real-world challenges that remain difficult even with AI assistance. However, this is a moving target given AI’s continual improvements, and it may put a significant burden on educators themselves. Additionally, students still need to develop foundational skills independently of AI to effectively evaluate its outputs.

Limitations and considerations

This research comes with important caveats:

- Identification methodology: Our filter, which inferred from conversation content whether a conversation was likely with an educator, retained only ~1.5% of conversations from higher education email addresses. This limited us to tasks explicitly linked to educators (e.g., creating syllabi) and likely missed many educator AI interactions that aren’t exclusive to educators (e.g., getting help explaining a difficult concept);

- Limited educator scope: Analysis restricted to accounts with higher education email addresses, excluding K-12 teachers;

- Early adopter bias: We're likely capturing educators already comfortable with AI who may not represent the broader educator population's technological readiness or attitudes;

- Survey limitations: Northeastern University faculty data provides qualitative context but represents a limited sample from a single institution that may not generalize;

- Platform specificity: This analysis focuses on Claude.ai usage and may not reflect patterns on other AI platforms;

- Temporal constraints: The analysis window of May and June does not capture seasonal variations in educator AI usage throughout the academic year.

Looking ahead

Our findings reveal a complex picture of educator AI adoption. The diversity of applications—from building interactive simulations to managing administrative tasks—shows AI's expanding presence across academic functions.

Perhaps most encouraging is how educators are using AI to build tangible educational resources. This shift from AI as a conversational tool to AI as a creative partner could help address longstanding resource constraints in education. As one professor noted, custom simulations and interactive experiments that were once “prohibitively expensive” in terms of time are now possible, creating more engaging experiences for students.

However, tension remains around AI-assisted grading. Whereas nearly half of grading-related conversations showed automation patterns in our data, surveyed faculty rated grading as AI's least effective application. This disconnect between what's being attempted and what's viewed as appropriate highlights the ongoing struggle to balance efficiency gains with educational quality and ethical considerations.

These findings suggest that narratives around AI in education will continue evolving alongside the technology itself. Educator views on appropriate AI use, particularly for sensitive tasks like grading, may shift as tools improve and best practices emerge. Equally important for future research is understanding how student and educator AI usage interact—how do students perceive and respond when they know their professors are using AI? How does educator adoption influence student learning behaviors?

Our research captures educators in a moment of active experimentation, building new possibilities while grappling with fundamental questions about their role in an AI-augmented classroom. The path forward will require ongoing dialogue, careful policy development, and continued research to ensure these tools enhance rather than compromise the educational experience.

BibTeX

If you'd like to cite this post, you can use the following BibTeX entry:

@online{benthand2025education,
  author = {Drew Bent and Kunal Handa and Esin Durmus and Alex Tamkin and Miles McCain and Stuart Ritchie and Ryan Donegan and Jennifer Martinez and Jason Jones},
  title = {Anthropic Education Report: How Educators Use Claude},
  date = {2025-08-26},
  year = {2025},
  url = {https://www.anthropic.com/news/anthropic-education-report-how-educators-use-claude},
}

Acknowledgements

Drew Bent* and Kunal Handa* designed and executed the experiments and wrote the blog post.

Esin Durmus, Alex Tamkin, Miles McCain, Stuart Ritchie, Jennifer Martinez, Ryan Donegan, and Jason Jones provided valuable feedback and discussion.

Footnotes

1 The conversations took place during an 11-day period from May 22 to June 2, 2025.

2 Specifically, we used the following filter, powered by Claude, to identify educator-relevant conversations: “Is this conversation likely to be with an educator (teacher, professor, or instructor) seeking help with instructional content, grading, research, or administrative duties? Make sure to not include students doing their own coursework, research papers, etc. Err on the side of conservatism and assume it's not an educator if you're not sure.”

*Conversations whose augmentation/automation category could not be identified were excluded from the chart. For more information on these categories, please see our Anthropic Economic Index research.